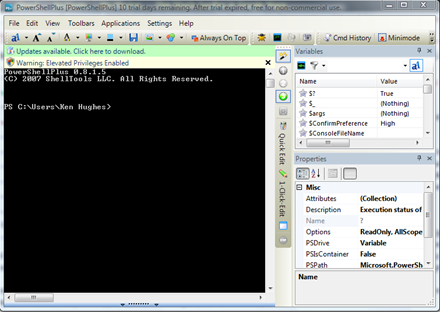

One of my colleagues switched me on to PowerShell Plus and I’m loving it.

It has a code editor, snippets, a view of variable values, logging tools and much more, including a really neat feature called ‘MiniMode’ (see the toolbar icon at the extreme right in the image).

‘MiniMode’ closes all toolbars and tool windows except the main console, and also makes the console window transparent (the level of transparency is user-configurable). This mode is really easy to work with…

There is a free single-user license for non-commercial use.

I encourage you to try it out.